
Many saw the rise of AI in recruitment as a boon for efficiency.

On one hand, it allowed recruiters to screen hundreds of resumes in minutes, freeing time to engage with the most suitable candidates and reducing overall time to hire.

On the other hand, it created the potential for bias to seep into the hiring process. Amazon's automated recruitment system, which disfavored female candidates, is a prime example. Although Amazon scrapped that system, many more cases of AI bias in hiring and recruitment have since emerged.

In fact, 49% of employed job seekers say AI hiring tools are more prone to bias than humans alone.

In this article, we will explore the reasons behind AI bias in recruitment and the actionable strategies recruiters can use to mitigate it.

How is AI bias impacting hiring?

Let's face it: bias exists in the traditional hiring process too. AI can automate, and potentially amplify, these biases.

Several types of AI bias commonly occur. Understanding them helps identify how AI affects different areas of the recruitment process.

Sample bias

If the training data used to develop the AI focuses on a restricted pool of candidates, the resulting algorithm may struggle to fairly evaluate those outside that pool.

For instance, an AI trained on resumes from a specific industry might not be equipped to assess candidates with experience in a different sector.

Algorithmic bias

The way the AI interprets data can introduce bias. For example, an algorithm looking for leadership skills might favor candidates who use powerful language or cite quantifiable achievements, and disadvantage candidates whose equally strong communication skills are expressed differently.

Applicant tracking systems (ATS) are often the first hurdle in the hiring process. Amazon found that its hiring algorithm (no longer in use) favored applicants whose resumes contained words such as "executed" or "captured." These terms, often associated with a more aggressive leadership style, appeared more commonly on men's resumes. This highlights a concerning issue with natural language processing (NLP) algorithms: they can pick up on subtle gendered language patterns, putting qualified candidates who express their skills differently at a disadvantage.
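To see how word choice alone can shift outcomes, consider a deliberately simplified keyword scorer. This is a minimal sketch, not a reconstruction of Amazon's system: the weights below are hypothetical and stand in for what a model might learn from a historically skewed pool of hires. Two resumes describing the same accomplishment receive very different scores purely because of the verbs they use.

```python
# Hypothetical keyword weights, standing in for what a model might "learn"
# from historically skewed hiring data. These numbers are illustrative only.
LEARNED_WEIGHTS = {
    "executed": 2.0,
    "captured": 2.0,
    "led": 1.0,
    "coordinated": 0.5,
    "supported": 0.5,
}


def keyword_score(resume_text: str) -> float:
    """Sum the weights of every known keyword that appears in the resume."""
    words = resume_text.lower().replace(",", " ").replace(".", " ").split()
    return sum(LEARNED_WEIGHTS.get(word, 0.0) for word in words)


# Two descriptions of the same accomplishment, phrased differently.
resume_a = "Executed a product launch and captured 20% market share."
resume_b = "Coordinated a product launch and supported growth to 20% market share."

print(keyword_score(resume_a))  # 4.0
print(keyword_score(resume_b))  # 1.0
```

Nothing in the scorer mentions gender, yet if one group tends to favor the higher-weighted verbs, its members are systematically ranked higher.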

Representation bias

If a particular demographic is under-represented in the training data, the AI may not be equipped to evaluate candidates from that group properly. 50% of people say their gender, ethnicity, or race has made it hard for them to land a job, and AI recruitment systems trained on unrepresentative data can reinforce those same barriers.
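One way to spot representation bias before training is to compare each group's share of the training data with its share of a reference population. The sketch below assumes you have a group label for each training record and a reference distribution (for example, your applicant pool); the function name, the labels, and the 0.5 tolerance are illustrative assumptions, not an established standard.

```python
from collections import Counter


def representation_gaps(training_groups, reference_shares, tolerance=0.5):
    """Flag groups whose share of the training data is below
    `tolerance` times their share of the reference population."""
    if not training_groups:
        raise ValueError("training_groups must not be empty")
    counts = Counter(training_groups)
    total = len(training_groups)
    flagged = {}
    for group, expected_share in reference_shares.items():
        observed_share = counts.get(group, 0) / total
        if observed_share < tolerance * expected_share:
            flagged[group] = observed_share
    return flagged


# Hypothetical example: group labels for 100 historical resumes, compared
# with an assumed 50/50 split in the applicant pool.
training = ["men"] * 85 + ["women"] * 15
reference = {"men": 0.5, "women": 0.5}

print(representation_gaps(training, reference))  # {'women': 0.15}
```

A check like this only covers attributes you actually have labels for, and the right reference population depends on the role and the labor market.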

Representation bias is closely related to selection or stereotyping bias, in which the AI system favors certain candidates over others based on irrelevant or unfair factors such as ethnicity, gender, other demographic information, educational background, or work experience.

Independent research at Carnegie Mellon University revealed a surprising trend: Google's online advertising system showed ads for high-paying jobs to men more often than to women, even though the positions were open to all genders.

Sometimes, the AI recruitment tool may also assign certain traits, abilities, or preferences to candidates based on group membership or stereotypes. For example, if the algorithm assumes that candidates from a particular demographic lack technical proficiency, it may unfairly disadvantage qualified individuals.

Measurement bias

The criteria used to measure success can be biased. For example, an algorithm focusing on years of experience might disregard a highly skilled candidate with a non-traditional career path. These metrics or criteria may not be relevant or valid predictors of job performance or success.

These instances of AI bias show how it can start at the early stages of the hiring process, potentially limiting the talent pool before candidates even submit their applications.

How to reduce the effects of AI bias in hiring

Human hiring is prone to emotional biases, and AI systems inherit bias from their training data; either can lead to unfair hiring practices. To address these concerns and mitigate AI bias for a diverse talent pipeline, here are a few things you can do:

Data diversity in training data

The first step toward a fairer recruitment process, without abandoning AI, is to improve the AI training data.

- Ensure that the training data used to develop the AI system is diverse and representative of the population. This includes diversity in terms of gender, race, ethnicity, age, socioeconomic background, etc.

- Review your existing candidate data for biases. Some features in the data might correlate with protected attributes (like race or gender) and could lead to biased outcomes. Remove or mitigate the impact of such features in the model.

- As you use your AI recruitment system, track the types of candidates selected and address any emerging representation gaps.

- Use techniques such as adversarial debiasing. This technique trains a model to predict the target variable (e.g., the hiring decision) while simultaneously trying to confuse an adversary tasked with predicting the protected attributes (e.g., race or gender). By minimizing the adversary's ability to predict those attributes accurately, the model learns to make decisions that are independent of them.

Algorithmic updates and review

- Partner with data scientists or AI specialists to conduct regular audits of your AI hiring tools. These audits should investigate potential bias in the algorithms and the data they're trained on.

- Consider AI solutions with Explainable AI (XAI) features to understand the reasoning behind the AI's decisions, helping to identify and address bias.

- You can also implement fairness-aware algorithms, which build fairness considerations directly into the model training process. The fairness metrics they target can include demographic parity, equal opportunity, and disparate impact.
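The fairness metrics named above are straightforward to compute on a batch of screening decisions, which also makes them a practical starting point for regular audits. This is a minimal sketch using common definitions: demographic parity compares selection rates across groups, equal opportunity compares selection rates among candidates who were actually qualified (true positive rates), and the disparate impact ratio divides the lowest group selection rate by the highest. The group labels, the data, and the availability of a ground-truth `qualified` flag are assumptions for illustration.

```python
def _rate(flags):
    """Share of 1s in a non-empty list of 0/1 flags."""
    return sum(flags) / len(flags)


def fairness_report(groups, selected, qualified):
    """Compute group fairness metrics for a batch of screening decisions.

    groups    -- group label for each candidate (hypothetical labels)
    selected  -- 1 if the AI advanced the candidate, else 0
    qualified -- 1 if the candidate was actually qualified, else 0
    """
    labels = sorted(set(groups))

    # Selection rate per group: P(selected | group).
    selection = {
        g: _rate([s for gr, s in zip(groups, selected) if gr == g]) for g in labels
    }

    # True positive rate per group: P(selected | qualified, group).
    tpr = {}
    for g in labels:
        hits = [s for gr, s, q in zip(groups, selected, qualified) if gr == g and q == 1]
        if hits:  # skip groups with no qualified candidates in this batch
            tpr[g] = _rate(hits)

    lowest, highest = min(selection.values()), max(selection.values())
    return {
        "selection_rate": selection,
        "demographic_parity_diff": highest - lowest,
        # If nobody was selected, there is no disparity to report.
        "disparate_impact_ratio": lowest / highest if highest else 1.0,
        "equal_opportunity_diff": max(tpr.values()) - min(tpr.values()) if tpr else 0.0,
    }


groups = ["A"] * 5 + ["B"] * 5
selected = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]
qualified = [1, 1, 1, 1, 1, 1, 1, 1, 1, 0]

report = fairness_report(groups, selected, qualified)
print(report["selection_rate"])  # {'A': 0.8, 'B': 0.4}
print(report["disparate_impact_ratio"] < 0.8)  # True
```

A disparate impact ratio below 0.8 corresponds to the "four-fifths rule" of thumb in the U.S. Uniform Guidelines on Employee Selection Procedures, which treats such a gap as evidence of possible adverse impact. Computing these numbers measures bias; fairness-aware algorithms go further by constraining or penalizing them during training.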

Human oversight

AI recruiting systems do streamline the initial screening process, freeing recruiters and hiring managers to focus on qualified candidates and build relationships with top talent. However, AI-generated results shouldn't make the final call.

- Establish a clear human review process in which qualified individuals assess the top candidates identified by the AI. This allows for a holistic evaluation that considers factors beyond what an algorithm can capture.

Another important factor in understanding the level of human oversight required is analyzing the transparency of your AI hiring solutions. Black box AI solutions offer limited transparency around their decision-making processes. This means you will lack the necessary visibility into how the AI arrives at its recommendations, making it difficult to understand the criteria and evaluate its effectiveness.

On the flip side, glass box AI gives you valuable insight into how its algorithms make decisions, letting you understand the factors and data that influence candidate assessments. This transparency also allows for meaningful human oversight and helps ensure fairness in the hiring process.
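A linear scoring model is one of the simplest examples of a glass box: every candidate's score breaks down into per-feature contributions that a reviewer can inspect. The sketch below is illustrative only; the feature names and weights are hypothetical, and real products may expose their explanations differently.

```python
# Hypothetical, human-readable weights for a transparent (glass box) scorer.
WEIGHTS = {
    "years_experience": 0.5,
    "skills_matched": 1.5,
    "certifications": 1.0,
}


def explain_score(candidate):
    """Return the candidate's total score and each feature's contribution to it."""
    contributions = {
        feature: weight * candidate.get(feature, 0)  # missing features contribute 0
        for feature, weight in WEIGHTS.items()
    }
    return sum(contributions.values()), contributions


total, parts = explain_score({"years_experience": 4, "skills_matched": 3, "certifications": 1})
print(total)  # 7.5
# List the factors from most to least influential.
for feature, value in sorted(parts.items(), key=lambda item: item[1], reverse=True):
    print(f"{feature}: {value}")
```

Because each contribution is visible, a reviewer can ask whether a heavily weighted factor, such as years of experience, is a valid predictor of job performance or a source of the measurement bias described earlier.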

- Consider incorporating blind hiring practices into your AI workflow. This could involve anonymizing resumes before the AI analyzes them by removing names, schools, and other potentially biasing information.

While anonymization within the AI itself might be technically complex, some platforms allow for blind review by human reviewers after the AI has narrowed down the candidate pool.
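At its simplest, blind review means showing reviewers a copy of each candidate record with identifying fields removed. This is a minimal sketch, assuming candidate data is stored as structured fields; the field names are hypothetical and should be adapted to your own schema. It does not scrub names or other identifiers that appear inside free-text fields such as a resume body.

```python
# Hypothetical field names that can reveal identity or act as proxies for
# protected attributes (for example, graduation_year can hint at age).
REDACTED_FIELDS = {"name", "email", "photo_url", "address", "school", "graduation_year"}


def anonymize(candidate: dict) -> dict:
    """Return a copy of the candidate record without potentially biasing fields."""
    return {key: value for key, value in candidate.items() if key not in REDACTED_FIELDS}


candidate = {
    "candidate_id": "c-102",  # kept so the record can be re-linked after review
    "name": "Jane Doe",
    "school": "State University",
    "skills": ["Python", "SQL"],
    "years_experience": 4,
}
print(anonymize(candidate))
# {'candidate_id': 'c-102', 'skills': ['Python', 'SQL'], 'years_experience': 4}
```

Keeping an opaque ID in the redacted copy lets the team restore full details only after reviewers have recorded their assessments.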

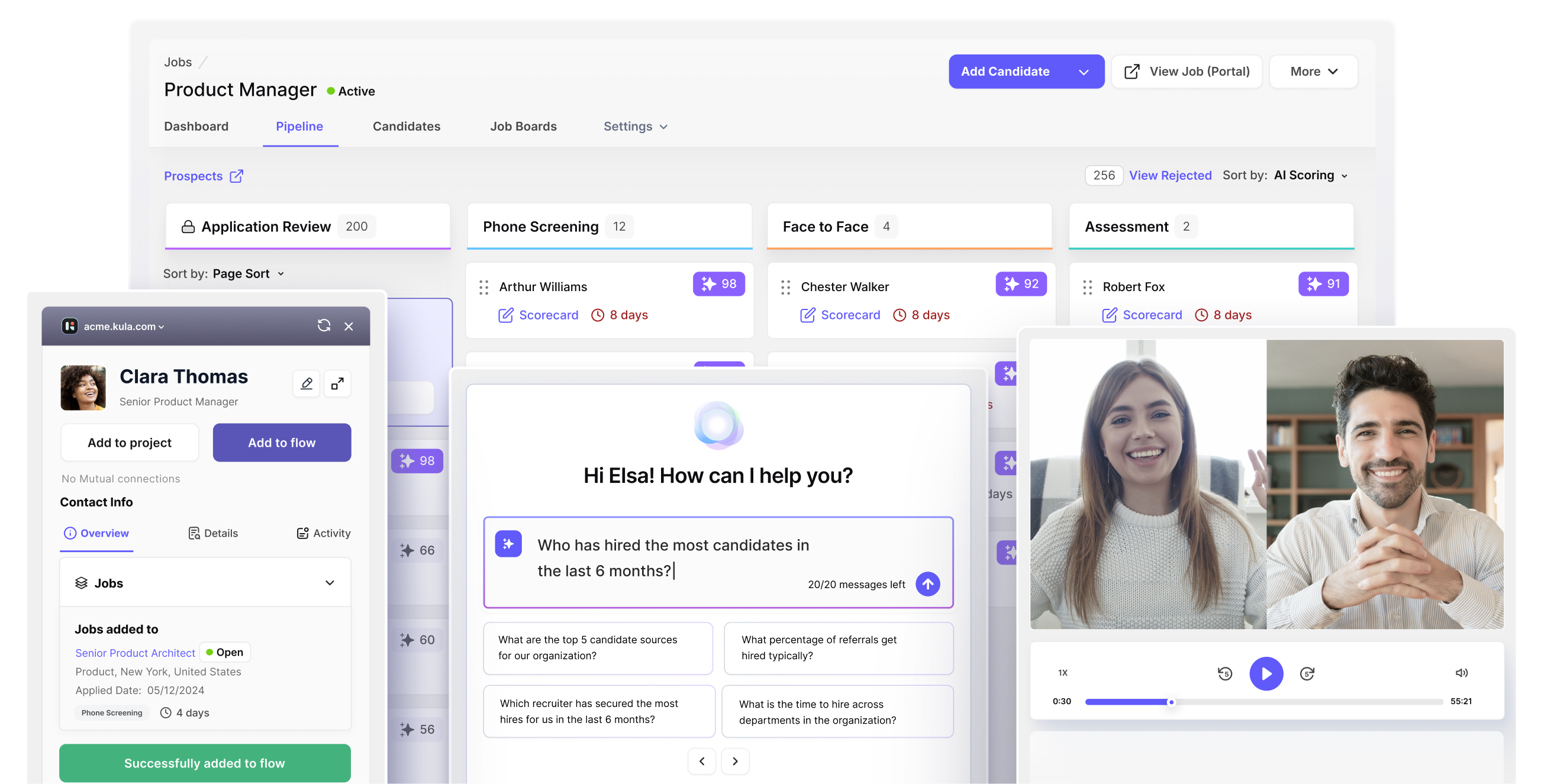

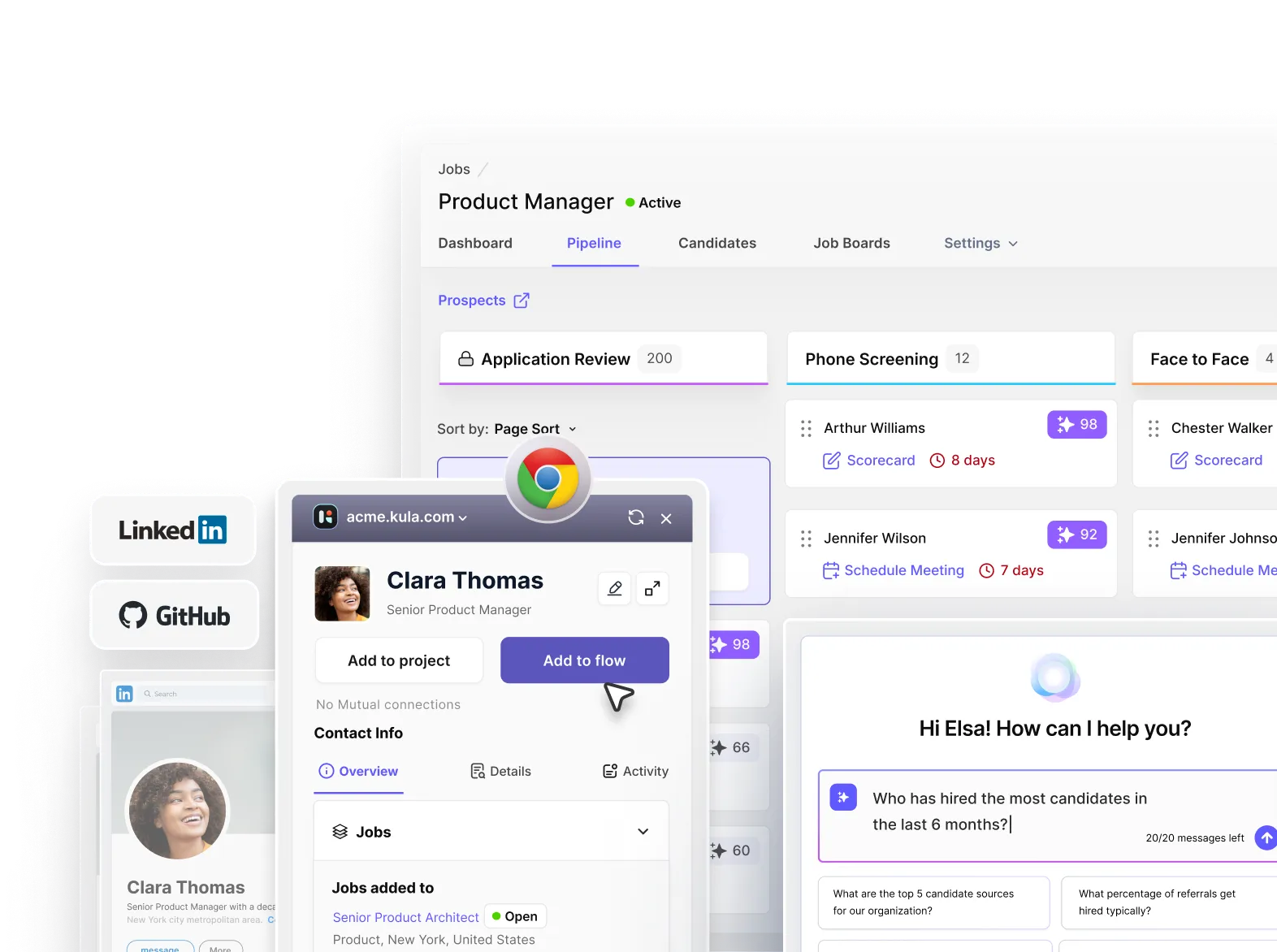

Kula ATS offers features such as anonymization in grading candidate assessments and displaying candidate source data to interviewers, reducing the risk of biased decision-making. This two-pronged approach combines the strengths of AI and human judgment.

Diverse hiring teams and bias-free applicant tracking system

Having a diverse hiring team and selecting the right AI recruitment software is critical in reducing AI bias in recruitment.

- A diverse hiring team brings together individuals with different backgrounds, experiences, and viewpoints. These diverse perspectives can help identify and address biases that may not be apparent to a homogeneous team. This also encourages questioning assumptions and challenging the status quo.

- Choose a reputable AI-powered ATS that is transparent about its algorithms, data sources, and model evaluation methods.

Kula ATS stands out by promoting diversity, equity, and inclusion in the hiring process.

- Good AI recruiting software offers customization and flexibility, allowing organizations to tailor the system to their specific needs and preferences.

Kula's platform supports this and goes further, with reminders that prompt interviewers and stakeholders to prioritize diversity and inclusion throughout the hiring process.

With 1-click job distribution to diversity-focused job boards, including those targeting military, women, and minority candidates, Kula helps organizations reach a more diverse pool of applicants.

There are many ways to reduce AI bias in hiring, but these are among the most important. If your recruitment software shows signs of AI bias, it's crucial to pinpoint the main issues and tackle them using the methods above.

Reduce AI bias in hiring with Kula’s all-in-one ATS

As we've explored, AI algorithms can inherit biases from their training data, leading to unfair hiring practices and limiting your access to top talent.

The consequences of unchecked AI bias are far-reaching. It can cause you to overlook qualified candidates from diverse backgrounds, hindering your ability to build a truly representative workforce.

Furthermore, recent guidance from the U.S. Equal Employment Opportunity Commission (EEOC) emphasizes employers' legal and ethical responsibility to ensure fairness in AI-powered hiring decisions.

The good news is that by taking proactive measures, you can use AI as a force for good in your recruitment process. Importantly, AI, when used responsibly, can expand your talent pool, identifying qualified individuals who might have previously been overlooked.

Kula ATS is built with the legal and ethical implications of AI bias in mind, and the platform is designed to support the inclusive recruitment practices described in this article.

So, if you are ready to build a more diverse and qualified workforce with the power of AI, contact us today and explore the potential of responsible AI hiring.